
9. The philosopher from Birmingham

Aaron Sloman, professor of philosophy at the School of Computer Science of the University of Birmingham, is certainly one of the most influential theoreticians of computer models of emotion. In an article from 1981 titled "Why robots will have emotions" he stated:

Like Bates, Reilly or Elliott, Sloman represents the broad and shallow approach. For him, it is more important to develop a complete system with little depth than individual modules with great depth. He is convinced that only in this way can a model be developed that reflects reality with some degree of realism. Since 1981, Sloman and his coworkers in the Cognition and Affect Project have published numerous works on the topic of "intelligent systems with emotions", which can be divided roughly into three categories:

To understand Sloman's approach correctly, one must see it in the context of his epistemological approach, which is concerned primarily not with emotions but with the construction of intelligent systems. I shall briefly sketch the core ideas of Sloman's theory because they form the basis for understanding the "libidinal computer" developed by Ian Wright (see below).

9.1. Approaches to the construction of intelligent systems

Sloman's interest lies not primarily in simulating the human mind but in developing a general "intelligent system", independent of its physical substrate. Humans, bonobos, computers and extraterrestrial beings are different implementations of such intelligent systems; the underlying construction principles, however, are identical. Sloman divides previous attempts to develop a theory of how the human mind (and thus intelligent systems in general) works into three large groups: semantics-based, phenomena-based and design-based. Semantics-based approaches analyze how humans describe psychological states and processes, in order to determine the implicit meanings underlying the use of words in everyday language. Among these he counts the approaches of Ortony, Clore and Collins as well as that of Johnson-Laird and Oatley.

Sloman's argument against these approaches is: "As a source of information about mental processes such enquiries restrict us to current 'common sense' with all its errors and limitations." (Sloman, 1993, p. 3)

According to Sloman, some philosophers who analyze concepts also produce semantics-based theories. What distinguishes them from the psychologists, however, is that they do not concentrate on existing concepts alone but are often more interested in the set of all possible concepts.

Phenomena-based approaches assume that psychological phenomena like "emotion", "motivation" or "consciousness" are already clearly understood and that everybody can intuitively recognize concrete examples of them. They therefore only try to correlate measurable phenomena arising at the same time (e.g. physiological effects, behaviour, environmental characteristics) with the occurrence of such psychological phenomena. According to Sloman, such approaches are found particularly among psychologists. His criticism of them is:

Design-based approaches transcend the limits of these two approaches. Here Sloman refers explicitly to the work of the philosopher Daniel Dennett, who has substantially shaped the debate on intelligent systems and consciousness. Dennett distinguishes three stances one can adopt in order to make predictions about an entity: the physical stance, the design stance and the intentional stance. The physical stance is "simply the standard laborious method of the physical sciences" (Dennett, 1996, p. 28); the design stance, on the other hand, assumes "that an entity is designed as I suppose it to be, and that it will operate according to that design" (Dennett, 1996, p. 29). The intentional stance, which according to Dennett can also be regarded as a "sub-species" of the design stance, predicts the behaviour of an entity, for example of a computer program, "as if it were a rational agent" (Dennett, 1996, p. 31).

Representatives of the design-based approach adopt the position of an engineer who tries to design a system that produces the phenomena to be explained. However, not every design requires a designer:

Strictly speaking, a design is nothing more than an abstraction that determines a class of possible instances. It need not be concrete or materially implemented, although its instances may well have a physical form. For Sloman, the term "design" is closely linked with the term "niche".

A niche, too, is neither a material entity nor a geographical region. Sloman defines it broadly as a collection of requirements for a functioning system. In the development of intelligent agents in AI, design and niche play a special role: Sloman speaks of design-space and niche-space. A genuinely intelligent system will interact with its environment and will change in the course of its evolution. It thus moves along a certain trajectory through design-space. This corresponds to a trajectory through niche-space, because the changes in the system allow it to occupy new niches:

Sloman identifies different trajectories through design-space. Individuals that can adapt and change themselves go through so-called i-trajectories. Evolutionary developments that are possible only over generations of individuals he calls e-trajectories. Finally, there are changes to individuals that are made from the outside (for example, debugging software), which he calls r-trajectories (r for repair). Together these elements result in dynamic systems that can be implemented in different ways.
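To make this vocabulary a little more concrete, here is a toy sketch under our own simplifying assumptions: a design is reduced to a set of capabilities, a niche to a set of requirements, and a design "fits" a niche when it covers all of that niche's requirements. A trajectory is then just a sequence of designs. The class names, the function names and the capability labels are hypothetical illustrations, not Sloman's formalism.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Design:
    """A design: an abstract class of possible instances, here reduced to a set of capabilities."""
    capabilities: frozenset


@dataclass(frozen=True)
class Niche:
    """A niche: a collection of requirements on a functioning system (not a place)."""
    requirements: frozenset


def fits(design: Design, niche: Niche) -> bool:
    """A design satisfies a niche if it provides every required capability."""
    return niche.requirements <= design.capabilities


def trajectory_niches(trajectory, niches):
    """For each design on a trajectory, list the names of the niches it can occupy."""
    return [sorted(name for name, n in niches.items() if fits(d, n)) for d in trajectory]


# Hypothetical capability labels, for illustration only.
niches = {
    "graze": Niche(frozenset({"move", "eat"})),
    "hunt": Niche(frozenset({"move", "eat", "plan"})),
}
# An i-trajectory: a single individual acquires a planning capability by learning.
i_trajectory = [
    Design(frozenset({"move", "eat"})),
    Design(frozenset({"move", "eat", "plan"})),
]
print(trajectory_niches(i_trajectory, niches))  # [['graze'], ['graze', 'hunt']]
```

The same function would apply unchanged to an e-trajectory (designs across generations) or an r-trajectory (a design modified from outside); in this sketch only the origin of the change differs, not the way movement through niche-space is computed.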

For Sloman, one of the most urgent tasks is to specify biological terms such as niche, genotype etc. more clearly, in order to understand exactly the relations between niches and designs for organisms. This would also be substantial progress for psychology:

Sloman grants that the demands placed on design-based approaches are not trivial. He names five requirements that such an approach should fulfill:

A design-based approach does not necessarily have to be a top-down approach. Sloman believes that models which combine top-down and bottom-up elements will be the most successful. For Sloman, design-based theories are more effective than other approaches, because:

9.2. The fundamental architecture of an intelligent system

What a design-based approach sketches are architectures. An architecture describes which states and processes are possible for a system that possesses it. From the set of all possible architectures, Sloman is particularly interested in a certain class: "... 'high level' architectures which can provide a systematic non-behavioural conceptual framework for mentality (including emotional states)." (Sloman, 1998a, p. 1) Such a framework for mentality

According to Sloman, an architecture for an intelligent system consists of four essential components: several functionally different layers, control states, motivators and filters, and a global alarm system.

9.2.1. The layers

Sloman postulates that every intelligent system possesses three layers:

The reactive layer is the

evolutionary oldest, and there is a multitude of organisms which only

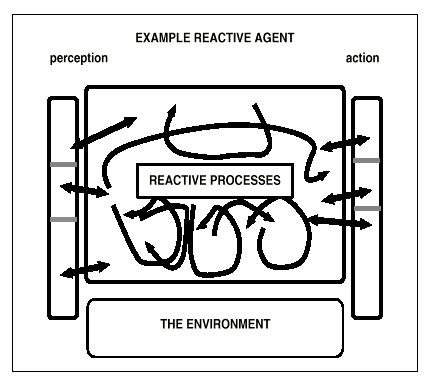

possess this layer. Schematically,

a purely reactive agent presents itself as follows:

Fig. 13: Reactive architecture (Sloman, 1997a,

p. 5) A reactive agent can make

neither plans nor develop new structures.

It is optimized for special tasks;

with new tasks, however, it cannot cope. What it is missing in flexibility, it gains at speed. Since almost all processes are clearly

defined, its reaction rate is high.
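A purely reactive agent can be sketched as a fixed, ordered table of condition-action rules: no plans and no alternatives, just a direct mapping from the current percept to an action. This is a minimal sketch under our own assumptions; the percept keys, distances and action names are hypothetical. It shows both sides of the trade-off described above: each step is a single cheap pass over the rules, and a situation that no rule anticipates leaves the agent with nothing to do.

```python
from typing import Callable, Dict, List, Optional, Tuple

Percept = Dict[str, float]
Rule = Tuple[Callable[[Percept], bool], str]

# Hypothetical rules, checked in order; the first match wins. No planning, no learning.
REACTIVE_RULES: List[Rule] = [
    (lambda p: p.get("obstacle_distance", 99.0) < 1.0, "turn_away"),
    (lambda p: p.get("food_distance", 99.0) < 0.5, "eat"),
    (lambda p: p.get("food_distance", 99.0) < 5.0, "approach_food"),
]


def react(percept: Percept, rules: List[Rule] = REACTIVE_RULES) -> Optional[str]:
    """Return the action of the first rule whose condition holds, or None."""
    for condition, action in rules:
        if condition(percept):
            return action
    return None  # an unforeseen situation: the agent cannot improvise


print(react({"obstacle_distance": 0.5, "food_distance": 0.3}))  # turn_away
print(react({"food_distance": 3.0}))                             # approach_food
print(react({"light": 1.0}))                                     # None
```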

Insects are, according to Sloman, examples for such purely reactive

systems, which prove at the same time that the interaction of a number of

such agents can produce astonishingly complex results (e.g. termite

towers). A second,

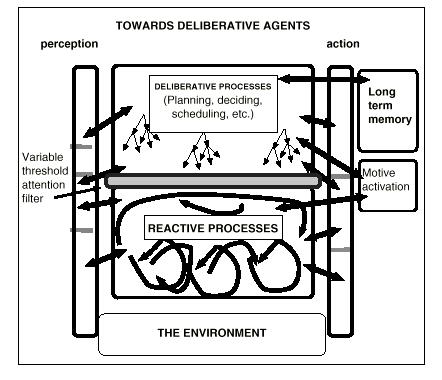

phylogenetically younger layer gives an agent more qualities by far. Schematically, this looks as follows:

Fig. 14: Deliberative architecture (Sloman,

1997a, p. 6) A

deliberative agent can re-combine its action repertoire arbitrarily, develop

plans and evaluate them before execution.

An essential condition for this is a long-term memory in order to

store plans not completed yet or to rest and evaluate later the probable

consequences of plans. The

construction of such plans proceedes gradually and is therefore not

a continuous, but a discrete process.
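By contrast, a deliberative agent builds candidate plans by recombining actions from its repertoire and evaluates their predicted consequences before acting. The minimal sketch below does this with a breadth-first search over a hypothetical grid world. Each expansion is a discrete step, and the search frontier plays the role of the memory that holds unfinished plans. The world model, the action names and the search strategy are our assumptions; Sloman does not prescribe a particular planning algorithm.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

State = Tuple[int, int]
# Hypothetical action repertoire: each action is a displacement on a grid.
ACTIONS: Dict[str, State] = {"north": (0, 1), "south": (0, -1), "east": (1, 0), "west": (-1, 0)}


def simulate(state: State, action: str) -> State:
    """Predict the consequence of an action without executing it."""
    dx, dy = ACTIONS[action]
    return (state[0] + dx, state[1] + dy)


def plan(start: State, goal: State, blocked: Set[State], max_steps: int = 20) -> Optional[List[str]]:
    """Breadth-first search: recombine actions step by step until a plan reaches the goal."""
    frontier = deque([(start, [])])  # unfinished plans held in memory
    seen = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions  # evaluated as successful before anything is executed
        if len(actions) >= max_steps:
            continue
        for name in ACTIONS:
            nxt = simulate(state, name)
            if nxt not in blocked and nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, actions + [name]))
    return None


route = plan((0, 0), (2, 0), blocked={(1, 0)})
print(route, len(route))
```

The blocked cell forces a detour, which a reactive rule table could only handle if a rule for exactly that situation had been built in.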

Many of the processes in the deliberative layer are of serial

nature and therefore resource-limited.

This seriality offers a number of advantages:

at any time it is clear to the system which plans have led to a success, and it can assign rewards accordingly; at the same

time, the execution of contradicting plans is prevented; communication with the long term storage

is to a large extent error free. Such a resource-limited

subsystem is of course highly error-prone. Therefore filtering processes with variable

thresholds are necessary, in order to guarantee the working of the system

(see below). The

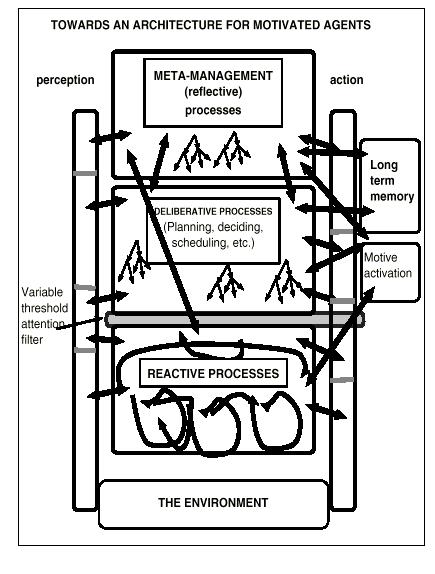

phylogenetically youngest layer of the system is what Sloman calls the meta

management: Fig. 15: Meta management architecture (Sloman,

1997a, p. 7) This

is a mechanism which monitors and evaluates the internal processes of

the system. Such a subsystem

is necessary to evaluate the plans and strategies developed by the deliberative

layer and, if necessary, to

reject them; to recognize recurring patterns in the deliberative subsystem;

to develop long-term strategies; and to communicate effectively with

others. Sloman points

out that these three layers are hierarchical, but parallel and that they also

work parallelly. Like the overall

system, these modules possess their own architecture, which can contain

further subsystems with their own architecture. The

meta management module is everything else butperfect. This is because it does not have comprehensive access to all internal

states and processes, that control over the deliberative subsystem is

incomplete, and that the self evaluations can be based on wrong assumptions.
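The monitoring role of meta-management can be illustrated with a small sketch in which a hypothetical `MetaManager` observes the outcomes of strategies used by the deliberative layer and vetoes strategies that keep failing, a simple form of recognizing a recurring pattern. To reflect Sloman's point that meta-management lacks comprehensive access, it sees only the outcomes that are explicitly reported to it. The class name, the failure-rate criterion and both thresholds are our assumptions.

```python
from collections import defaultdict
from typing import Dict, List


class MetaManager:
    """Hypothetical meta-management layer: watches deliberative strategies, vetoes failing ones."""

    def __init__(self, min_trials: int = 3, max_failure_rate: float = 0.6) -> None:
        self.min_trials = min_trials
        self.max_failure_rate = max_failure_rate
        self.outcomes: Dict[str, List[bool]] = defaultdict(list)

    def observe(self, strategy: str, succeeded: bool) -> None:
        """Record only what the deliberative layer reports; unreported events stay invisible."""
        self.outcomes[strategy].append(succeeded)

    def failure_rate(self, strategy: str) -> float:
        results = self.outcomes.get(strategy, [])
        return results.count(False) / len(results) if results else 0.0

    def approve(self, strategy: str) -> bool:
        """Reject a strategy once enough evidence shows a recurring pattern of failure."""
        if len(self.outcomes.get(strategy, [])) < self.min_trials:
            return True  # too little evidence; the self-evaluation may still be wrong
        return self.failure_rate(strategy) <= self.max_failure_rate


meta = MetaManager()
for ok in (False, False, True, False):
    meta.observe("push_through_crowd", ok)
meta.observe("go_around", True)
print(meta.approve("push_through_crowd"), meta.approve("go_around"))  # False True
```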

9.2.2. The control

states

An

architecture like the one outlined the so far contains a variety of control

states on different levels. Some

of them operate on the highest abstraction level, while others are used

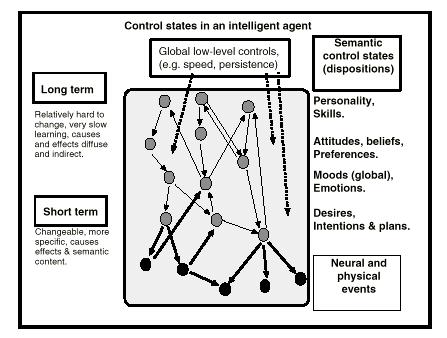

unconsciously with frequent control decisions. The following

illustration gives an overview over the control states of the system:

Fig. 16: Control states of an intelligent

system (Sloman, 1998b, p. 17) Different

control states possess also different underlying mechanisms. Some can be of chemical nature, while

others have to do with information structures. Control

states contain dispositions to react to internal or external attractions

with internal or external actions.

Within the overall system, numerous control states can exist simultaneously and interact with one another. Folk psychology knows control states under numerous names: desires, preferences, beliefs, intentions, moods etc. By defining such states through an architecture, Sloman wants to supply a "rational reconstruction of a number of everyday mental concepts". Each control state contains, among other things, a structure, possible transformations and, where applicable, semantics. Sloman illustrates this with the example of a motivator (see below):

In addition, control states differ in whether they can be changed easily or only with difficulty. According to Sloman, many higher-order control states can be modified only in small steps and over a longer period. Moreover, higher-order control states are more powerful and have more influence on the overall system than lower-order control states. Sloman postulates a process called circulation, by which control states circulate through the overall system. Useful control states can rise in the hierarchy and increase their influence; useless control states can disappear from the system almost completely.

The result of all these processes is a kind of diffusion, through which the effects of a strong motivator slowly spread into countless long-lived control sub-states, up to their irreversible integration into reflexes and automatic reactions.

9.2.3. Motivators and filters

Motivators are a central component of every intelligent system. Sloman defines them as "mechanisms and representations that tend to produce or modify or select between actions, in the light of beliefs." (Sloman, 1987, p. 4). Motivators can arise only if goals are present. A goal is a symbolic structure (not necessarily physical in nature) which describes a state of affairs that is to be achieved, maintained or prevented. While beliefs are representations that are adapted to reality through perception and deliberative processes, goals are representations that elicit behaviour in order to adapt reality to the representation. Motivators are generated by a mechanism which Sloman calls a motivator generator or motivator generactivator.

Motivators are generated on the basis of external or internal information, or produced by other motivators. Sloman formally defines a motivator structure with ten fields: (1) a possible state of affairs which can be true or false; (2) a motivational attitude towards this state of affairs; (3) a belief regarding it; (4) an importance value; (5) an urgency; (6) an insistence value; (7) one or more plans; (8) a commitment status; (9) management information; and (10) a dynamic state such as "plan postponed" or "currently under consideration". In a later work (Sloman, 1997c), Sloman extended this structure by two further fields: (11) a rationale, if the motivator arose from an explicit thought process, and (12) an intensity, which specifies whether a motivator that is already being acted on continues to be preferred over other motivators. The strength of a motivator is determined by four variables:

Motivators compete with one another for attention, the limited resource of the deliberative subsystem. For this subsystem to work, there must be a mechanism that prevents new motivators from capturing attention at any moment. For this purpose the system possesses a so-called variable-threshold attention filter. The filter specifies a threshold value that a motivator must exceed in order to obtain attentional resources. As its name implies, this filter is variable and can be changed, for example by learning. Sloman illustrates this with the example of a novice driver who cannot hold a conversation while driving because he has to concentrate too hard on the road. After some practice, however, this becomes possible.

The insistence of a motivator, and thus the crucial variable for passing the filter, is a quickly computed heuristic estimate of the motivator's importance and urgency. Once a motivator has surfaced, i.e. passed the filter, several management processes are activated. Such management is necessary because several motivators regularly pass the filter at the same time. These processes are adoption-assessment (deciding whether a motivator is accepted or rejected); scheduling (deciding when a plan for this motivator is to be executed); expansion (developing plans for the motivator); and meta-management (deciding whether and when a motivator is to be considered by the management at all).
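Sloman's motivator record and the variable-threshold filter can be rendered as a small data structure. The sketch below keeps only a subset of the twelve fields and computes insistence as a weighted combination of importance and urgency. The 0 to 1 scales, the 0.6/0.4 weights and the function name are hypothetical: the text only says that insistence is a quickly computed heuristic of importance and urgency.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Motivator:
    # A subset of Sloman's fields; 0..1 value scales are an assumption.
    proposition: str              # (1) possible state of affairs
    attitude: str                 # (2) e.g. "make true", "keep true", "prevent"
    importance: float             # (4)
    urgency: float                # (5)
    plans: List[str] = field(default_factory=list)  # (7)
    status: str = "unconsidered"  # (10) dynamic state

    @property
    def insistence(self) -> float:
        """(6) A cheap heuristic estimate; the 0.6/0.4 weighting is hypothetical."""
        return 0.6 * self.importance + 0.4 * self.urgency


def attention_filter(candidates: List[Motivator], threshold: float) -> List[Motivator]:
    """Variable-threshold filter: only sufficiently insistent motivators surface."""
    surfaced = [m for m in candidates if m.insistence > threshold]
    for m in surfaced:
        m.status = "surfaced"  # the management processes take over from here
    return surfaced


motivators = [
    Motivator("baby near ditch", "prevent", importance=0.9, urgency=0.9),
    Motivator("tidy the room", "make true", importance=0.3, urgency=0.1),
]
# The threshold is a parameter, so learning or meta-management could adjust it.
print([m.proposition for m in attention_filter(motivators, threshold=0.5)])  # ['baby near ditch']
```

Because the threshold is passed in rather than fixed, the same filter can be made stricter or more permissive over time, which is the property Sloman's driver example turns on.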

Sloman's attention-filter penetration theory is more complex than the theory of Oatley and Johnson-Laird. He postulates that not every motivator interrupts the current activity, but only those that either have a high degree of insistence or for which the relevant attention filters are not set particularly high.

9.2.4. The global alarm system

A system that has to survive in a continually changing environment needs a mechanism that allows it to react to such changes without delay. Such a mechanism is an alarm system. An alarm system is important not only for a reactive but also for a deliberative architecture. For example, planning ahead can reveal a threat or an opportunity that calls for an immediate change of strategy. Sloman draws a parallel between his alarm system and neurophysiological findings:

The different layers of the system are influenced by the alarm system, but in different ways. At the same time, they can also pass information to the alarm system and thus elicit a global alarm.

9.3. Emotions

For Sloman, emotions are not independent processes but emerge from the interaction of the different subsystems of an intelligent system. There is therefore no need for a separate "emotion module". A look at psychological theories of emotion leads Sloman to the conclusion:

If, however, one views emotions as the result of a suitably constructed architecture, then, according to Sloman, many misunderstandings can be cleared up. He therefore regards a theory that analyzes emotions in connection with architectural concepts as more effective than other approaches:

The different layers of the outlined architecture also support different emotions. The reactive layer is responsible for disgust, sexual arousal, startle and fear of large, fast-approaching objects. The deliberative layer is responsible for frustration through failure, relief at a danger avoided, fear of failure or pleasant surprise at a success. The meta-management layer supports shame, degradation, aspects of grief, pride and annoyance. Sloman's approach deliberately disregards the physiological accompaniments of emotions. For him these are only peripheral phenomena:

Nor does Sloman accept the objection that emotions are inseparably connected with bodily expressions. He counters that these are merely "relics of our evolutionary history" which are not essential to emotions. An emotion derives its meaning not from the bodily feelings that accompany it but from its cognitive content:

He argues similarly regarding a number of non-cognitive factors that could play a role in human emotions, for example chemical or hormonal processes. He asks whether the affective states elicited by such non-cognitive mechanisms really differ so much from those produced by cognitive processes:

How, then, do emotions arise in Sloman's intelligent system? Basically, he distinguishes three classes of emotions, which correspond to the three layers of his system. Emotions can arise through internal processes within each of these layers on the one hand, and through interactions between the layers on the other. Emotions are frequently accompanied by a state which Sloman calls perturbance. A perturbance exists when the overall system is partially out of control. It arises whenever a rejected, postponed or simply undesirable motivator resurfaces repeatedly and thus prevents or hinders the management of more important goals. Of crucial importance here is the insistence value of a motivator, which for Sloman represents a dispositional state. As such, a highly insistent motivator can elicit perturbances even if it has not yet passed the filter or is not yet being actively worked on.

Perturbances can be occurrent (attempting to gain control over attention) or dispositional (not attempting to gain control over attention). Perturbant states differ along several dimensions: duration, internal or external source, semantic content, kind of disruption, effect on attentional processes, frequency of disruption, positive or negative evaluation, development of the state, fading away of the state etc. Like emotions, perturbances are emergent effects of mechanisms whose task is to do something else. They result from the interaction of

For perturbances to emerge, the system therefore does not require a separate "perturbance mechanism"; likewise, from this point of view it makes no sense to ask about the function of a perturbant state. Perturbances, however, are not to be equated with emotions; rather, they typically accompany states that are generally called emotional. For Sloman, emotional states are, in principle, nothing other than motivational states caused by motivators.

A further characteristic of emotional states is that they produce new motivators. If, for example, a first emotional state resulted from a conflict between a belief and a motivator, new motivators can arise which lead to new conflicts within the system.

9.4. The implementation of the theory in MINDER1

Sloman and his working group have developed a working computer model named MINDER1 in which his architecture is partly implemented. MINDER1 is a pure software implementation; there is no crawling room with real robots. The model is described here only briefly; a detailed description can be found in [Wright and Sloman, 1996].

MINDER1 consists of a kind of virtual crawling room in which a virtual nanny (the minder) has to look after a number of virtual babies. These babies are "reactive minibots" which constantly move around the crawling room and are threatened by various dangers: they can fall into ditches and be damaged or die; their batteries can run down, so they have to get to a recharging station, and if the batteries are too depleted, they die; overpopulation of the crawling room turns some babies into rowdies which damage other babies; damaged babies must be brought to the hospital ward to be repaired, and if the damage is too great, the baby dies.

The minder has various tasks: it must ensure that the babies do not run out of energy, do not fall into a ditch and are not threatened by other dangers. For this purpose it can, for example, build fences to enclose the babies. It must lead minibots whose energy level is dangerously low to a recharging station, and lead others as far away from a ditch as possible. This variety of tasks ensures that the minder must constantly produce new motives, evaluate them and act accordingly. The more minibots enter the crawling room, the lower the minder's efficiency. The architecture of MINDER1 follows the basic principles described above.

It consists of three subsystems, each of which contains a number of further subsystems.

9.4.1. The reactive sub-system

The reactive sub-system contains four modules: perception, belief maintenance, reactive plan execution, and pre-attentive motive generation. The perception subsystem consists of a database which contains only partial information about the minder's environment. It works within a certain radius around the minder but cannot detect, for example, hidden objects.

An update of the database looks as follows:

`[new_sense_datum time 64 name minibot4 type minibot status alive distance 5.2 x 7.43782 y 12.4632 id 4 charge 73 held false]`

This means: information at time 64 about the minibot named minibot4. It is alive, is situated at a distance of 5.2 units from the minder, has the ID 4 and the charge 73, and is not held by another agent.
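The sense datum is a flat list of alternating keys and values. The small parser below, written in Python rather than in the language MINDER1 itself was implemented in, shows how such an update can be turned into a record. The function names and the type-conversion rules (booleans, then integers, then floats, otherwise strings) are our assumptions; the original MINDER1 code is not reproduced in this text.

```python
from typing import Dict, Union

Value = Union[str, int, float, bool]


def _convert(token: str) -> Value:
    """Turn a token into bool, int or float where possible; otherwise keep the string."""
    if token in ("true", "false"):
        return token == "true"
    for cast in (int, float):
        try:
            return cast(token)
        except ValueError:
            pass
    return token


def parse_sense_datum(text: str) -> Dict[str, Value]:
    """Parse '[new_sense_datum key value key value ...]' into a dictionary."""
    tokens = text.strip().strip("[]").split()
    if not tokens or tokens[0] != "new_sense_datum":
        raise ValueError("not a sense datum")
    pairs = tokens[1:]
    if len(pairs) % 2:
        raise ValueError("unpaired key")
    return {k: _convert(v) for k, v in zip(pairs[0::2], pairs[1::2])}


datum = parse_sense_datum(
    "[new_sense_datum time 64 name minibot4 type minibot status alive "
    "distance 5.2 x 7.43782 y 12.4632 id 4 charge 73 held false]"
)
print(datum["name"], datum["distance"], datum["charge"], datum["held"])  # minibot4 5.2 73 False
```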

The belief maintenance subsystem receives its information partly from the perception subsystem and partly from a belief database in which it is stored, for example, that fences are things with which one can secure a ditch. In order to delete wrong beliefs from the system, every belief is assigned a defeater. If the defeater evaluates to true, the corresponding belief is deleted from the database.
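The defeater mechanism can be sketched as a predicate attached to each belief. On every cycle, belief maintenance evaluates all defeaters against the minder's position and current percepts and drops the beliefs whose defeater is true. The example defeater encodes the rule given for minibot8 in the example that follows: a belief about an object that should be within sensor range but is not currently perceived is wrong. The names `Belief`, `out_of_sight_defeater`, `maintain_beliefs` and `SENSOR_RANGE`, and the range value of 10, are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple

SENSOR_RANGE = 10.0  # hypothetical value

Location = Tuple[float, float]


@dataclass
class Belief:
    name: str
    x: float
    y: float
    defeater: Callable[["Belief", Location, Set[str]], bool]


def out_of_sight_defeater(belief: Belief, my_location: Location, perceived: Set[str]) -> bool:
    """True (belief is wrong) if I should see the object where I believe it is, but do not."""
    in_range = math.dist(my_location, (belief.x, belief.y)) < SENSOR_RANGE
    return in_range and belief.name not in perceived


def maintain_beliefs(beliefs: List[Belief], my_location: Location, perceived: Set[str]) -> List[Belief]:
    """Keep only the beliefs whose defeater evaluates to false."""
    return [b for b in beliefs if not b.defeater(b, my_location, perceived)]


beliefs = [
    Belief("minibot8", 82.2426, 61.2426, out_of_sight_defeater),
    Belief("minibot4", 7.43782, 12.4632, out_of_sight_defeater),
]
# The minder stands near minibot8's believed position but perceives nothing there.
kept = maintain_beliefs(beliefs, my_location=(80.0, 60.0), perceived=set())
print([b.name for b in kept])  # ['minibot4']
```

The belief about minibot4 survives only because it lies outside the assumed sensor range, so the absence of a percept says nothing about it.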

An example:

`[belief time 20 name minibot8 type minibot status alive distance 17.2196 x 82.2426 y 61.2426 id 8 charge 88 held false [defeater [[belief == name minibot8 == x ?Xb y ?Yb ==] [WHERE distance(myself.location, Xb, Yb) < sensor_range] [NOT new_sense_datum == name minibot8 ==]]]]`

The defeater in this case means: "IF I have a belief about minibot8 AND I have no new perception data about minibot8 AND I am at a position where, according to my belief, I should have new perception data about minibot8, THEN my belief is wrong."

The reactive plan execution subsystem is necessary so that the minder can react quickly to changing external conditions. If, for example, it has the plan to move from one position in the crawling room to another, this plan should be executed without using too many resources. To achieve this, MINDER1 uses a method developed by Nilsson (1994), the teleo-reactive (TR) program formalism. MINDER1 has thirteen such TR programs, which enable it, for example, to look for objects or to manoeuvre in the room.

In order to use TR programs, the minder first needs goals. These are produced by the subsystem for pre-attentive motive generation, which consists of a set of generactivators. An example is the generactivator G_low_charge, which searches the belief database for information about babies with low charge. If it finds such information, it forms a motive from it and deposits it in the motive database. An example:

`[MOTIVE motive [recharge minibot4] insistence 0.322 status sub]`

The status sub denotes that the motive has not yet passed the filter. MINDER1 contains eight generactivators, which express its different concerns.

9.4.2. The deliberative sub-system

The deliberative sub-system of MINDER1 consists of the modules filter, motive management and plan execution. All these modules are shallow, i.e. they possess little depth of detail. The filter threshold in MINDER1 is a real number between 0 and 1.

A motivator with the status sub can pass the filter if its insistence value is higher than the filter threshold. The status of the motivator then changes from sub to surfacing. A motivator that does not manage to pass the filter during a time cycle can be sent back by the generactivator with a newly computed insistence value. All motivators that have passed the filter are processed by the motive management and receive the status surfaced. The motive management works with the three modules deciding, scheduling and expanding. The module "deciding" specifies whether the motivator is to be worked on immediately or later. If it is processed immediately, it receives the status active; if it is processed later, it receives the status suspended. Such a decision is usually possible only after the motivator has been subjected to closer inspection. This is done by the module "expanding", which expands the motivator so that it contains a meta plan. An example:

`[MOTIVE motive [save ditch1 minibot5] insistence 0.646361 status active]`

is partially expanded to:

`[MOTIVE motive [save ditch1 minibot5] insistence 0.646361 status active plan [[decide] [get_plan]] trp [stop] importance undef]`

The steps decide and get_plan of the meta plan are calls to management processes.
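The life cycle of a motive up to this point, from a generactivator such as G_low_charge through the filter to the status surfaced, can be sketched as follows. Only the status names sub, surfacing and surfaced and the comparison of insistence against a threshold between 0 and 1 come from the description of MINDER1. The charge limit of 30, the insistence formula and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Motive:
    goal: Tuple[str, str]
    insistence: float
    status: str = "sub"  # not yet through the filter


def g_low_charge(beliefs: List[Dict], limit: int = 30) -> List[Motive]:
    """Hypothetical generactivator: one recharge motive per baby with low charge."""
    motives = []
    for b in beliefs:
        if b["type"] == "minibot" and b["charge"] < limit:
            insistence = round(1 - b["charge"] / limit, 3)  # lower charge, higher insistence
            motives.append(Motive(("recharge", b["name"]), insistence))
    return motives


def filter_step(motives: List[Motive], threshold: float) -> None:
    """Motives whose insistence exceeds the threshold move from sub to surfacing."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must lie between 0 and 1")
    for m in motives:
        if m.status == "sub" and m.insistence > threshold:
            m.status = "surfacing"


def management_intake(motives: List[Motive]) -> None:
    """Motive management takes over surfacing motives and marks them surfaced."""
    for m in motives:
        if m.status == "surfacing":
            m.status = "surfaced"


beliefs = [
    {"type": "minibot", "name": "minibot4", "charge": 20},
    {"type": "minibot", "name": "minibot8", "charge": 88},
    {"type": "minibot", "name": "minibot2", "charge": 5},
]
motives = g_low_charge(beliefs)
filter_step(motives, threshold=0.5)
management_intake(motives)
print([(m.goal[1], m.insistence, m.status) for m in motives])
```

The motive for minibot4 stays at sub; on a later cycle the generactivator could resubmit it with a recomputed insistence, as described above.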

From the module "expanding", the motivator can be executed directly (status: executed), a stored plan can be called up (status: active, meta), or the motivator can be passed on to the module "deciding" (status: active, meta). In the module "deciding", the active motivator is submitted to a routine which determines its importance. This importance is derived heuristically from the insistence value; determining the urgency of a motivator is not yet implemented in MINDER1. As a result, the motivator can either be rejected (status: suspended, meta) or processed further (status: active, meta).
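Plan execution rests on Nilsson's teleo-reactive formalism, which can be summarized as an ordered list of condition-action rules that is re-evaluated on every cycle. The first rule whose condition holds determines the current action, and rules nearer the top are closer to the goal. An action may itself be another TR program. The toy program below moves an agent along a line towards a target position. It illustrates the general formalism only: it is not one of MINDER1's thirteen TR programs, and the world model, primitives and names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple, Union

World = Dict[str, float]
Action = Union[str, "TRProgram"]


class TRProgram:
    """Ordered condition-action rules; the first true condition fires (Nilsson-style)."""

    def __init__(self, name: str, rules: List[Tuple[Callable[[World], bool], Action]]) -> None:
        self.name = name
        self.rules = rules

    def step(self, world: World) -> str:
        """Return the primitive action to perform now, descending into called sub-programs."""
        for condition, action in self.rules:
            if condition(world):
                return action.step(world) if isinstance(action, TRProgram) else action
        raise RuntimeError(f"{self.name}: no rule applies")


# Hypothetical primitives: 'stop', 'move_left', 'move_right', 'grab'.
goto_target = TRProgram("goto_target", [
    (lambda w: abs(w["pos"] - w["target"]) < 0.5, "stop"),  # goal condition first
    (lambda w: w["pos"] < w["target"], "move_right"),
    (lambda w: True, "move_left"),
])

# A TR program may call another TR program as its action.
fetch = TRProgram("fetch", [
    (lambda w: w["holding"] > 0, "stop"),
    (lambda w: abs(w["pos"] - w["target"]) < 0.5, "grab"),
    (lambda w: True, goto_target),
])


def run(program: TRProgram, world: World, max_cycles: int = 50) -> List[str]:
    """Re-evaluate the program every cycle, so changes in the world are picked up at once."""
    trace = []
    for _ in range(max_cycles):
        action = program.step(world)
        trace.append(action)
        if action == "stop":
            break
        world["pos"] += 1.0 if action == "move_right" else -1.0
    return trace


print(run(goto_target, {"pos": 0.0, "target": 3.0}))
# ['move_right', 'move_right', 'move_right', 'stop']
```

Because the rules are checked afresh each cycle rather than followed as a fixed sequence, a disturbance such as the target moving is handled without replanning, which is why the formalism suits cheap reactive execution.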

Finally, the subsystem of plan execution is implemented as a set of TR programs which can call other TR programs. MINDER1 contains nine action primitives.

9.4.3. The meta-management sub-system

The meta-management sub-system of MINDER1 contains two modules: one that changes the filter threshold value and one that detects perturbant states. The filter threshold module observes the number of motivators that manage to pass the filter. If there are more than three, the threshold value is raised until only three or fewer motivators are being processed. Motivators with the status suspended which have already been processed are assigned the status sub again if their insistence value is lower than the filter threshold. Only when fewer than three motivators are being worked on is the threshold value lowered again. The interaction of this module and the generactivators, which recompute the insistence values of the motivators, results in a continuous movement of motivators from the pre-attentive into the attentive state and back. In this form, however, the system is not yet able to produce perturbances.
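The threshold rule can be written as a small control loop. The text specifies the target (raise the threshold while more than three motivators pass, lower it when fewer than three do, and demote suspended motivators whose insistence falls below the threshold back to sub) but not the step size. The 0.05 step, the one-step lowering per cycle and the function names are therefore assumptions.

```python
from dataclasses import dataclass
from typing import List

MAX_ATTENDED = 3
STEP = 0.05  # hypothetical step size; the text gives none


@dataclass
class Motivator:
    name: str
    insistence: float
    status: str  # e.g. "sub", "surfaced", "active", "suspended"


def n_passing(motivators: List[Motivator], threshold: float) -> int:
    return sum(1 for m in motivators if m.insistence > threshold)


def meta_manage(threshold: float, motivators: List[Motivator]) -> float:
    """One meta-management cycle: adapt the filter threshold, then demote suspended motivators."""
    if n_passing(motivators, threshold) > MAX_ATTENDED:
        while n_passing(motivators, threshold) > MAX_ATTENDED and threshold < 1.0:
            threshold = min(1.0, threshold + STEP)
    elif n_passing(motivators, threshold) < MAX_ATTENDED:
        threshold = max(0.0, threshold - STEP)
    for m in motivators:
        if m.status == "suspended" and m.insistence < threshold:
            m.status = "sub"  # back into the pre-attentive pool
    return threshold


ms = [
    Motivator("a", 0.9, "active"),
    Motivator("b", 0.8, "active"),
    Motivator("c", 0.7, "surfaced"),
    Motivator("d", 0.45, "surfaced"),
    Motivator("e", 0.42, "suspended"),
]
t = meta_manage(0.3, ms)
print(round(t, 2), [m.status for m in ms])
# 0.5 ['active', 'active', 'surfaced', 'surfaced', 'sub']
```

Run repeatedly, together with generactivators that recompute insistence, this produces the back-and-forth movement between pre-attentive and attentive states described above.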

In connection with MINDER1, Sloman therefore speaks of "proto-perturbances". To produce proto-perturbances, Sloman uses a trick made necessary by the system's lack of complexity. Motivators that refer to damaged minibots (babies) receive, by definition, a high insistence value; the management processes, however, assign a lower degree of importance to these motivators. This is a deviation from the normal behaviour of the management processes, in which importance is evaluated according to insistence. The corresponding module in the meta-management sub-system was designed to calculate the rate at which motivators are rejected.
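A rejection-rate monitor of this kind can be sketched as a sliding window over recent management decisions. The window length of 10 and the threshold of 0.5 are hypothetical values, and so is the sequence of decisions; it mimics the trick described above, in which highly insistent motives about damaged babies keep resurfacing but are assigned low importance and rejected.

```python
from collections import deque


class RejectionMonitor:
    """Hypothetical meta-management module: flags a proto-perturbance when rejections pile up."""

    def __init__(self, window: int = 10, threshold: float = 0.5) -> None:
        self.decisions = deque(maxlen=window)  # True means the motivator was rejected
        self.threshold = threshold

    def record(self, rejected: bool) -> None:
        self.decisions.append(rejected)

    def rejection_rate(self) -> float:
        return sum(self.decisions) / len(self.decisions) if self.decisions else 0.0

    def proto_perturbance(self) -> bool:
        return self.rejection_rate() > self.threshold


monitor = RejectionMonitor()
# Hypothetical decisions: insistent 'repair damaged baby' motives keep resurfacing
# and management keeps assigning them low importance, i.e. rejecting them.
for decision in ["accept", "reject", "reject", "accept", "reject", "reject", "reject"]:
    monitor.record(decision == "reject")
print(round(monitor.rejection_rate(), 2), monitor.proto_perturbance())  # 0.71 True
```

The monitor only registers the state; as the evaluation of MINDER1 notes, nothing in such a system yet acts on that information.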

If this value exceeds a certain threshold, a proto-perturbant state has occurred. MINDER1 does indeed show such proto-perturbances. However, the sub-system cannot process this information any further; for that, the system as a whole is not yet developed enough.

9.5. Summary and evaluation

Sloman's theoretical approach is certainly one of the most interesting with regard to the development of emotional computers. It is less his specific interest in emotions than his emphasis on architecture that opens up new perspectives. Sloman pursues, more consistently at the theoretical level than anyone else, an idea that others had also speculated about: that there is no fundamental difference between emotion and cognition. Both are aspects of the control structures of an autonomous system.

A detailed look at Sloman's work from 1981 to 1998, however, reveals a number of ambiguities. For example, the distinction between the terms goal, motive and motivator is not clear, because he uses them quite interchangeably. Nor does it become clear exactly what role perturbances play in the emergence of emotions and how they are connected with the global alarm system he postulates. Interestingly, his earlier work scarcely mentions this alarm system and deals mainly with perturbances; in his later work one finds nearly the opposite.

The proof that Sloman wanted to deliver with MINDER1 is not convincing in its present form. Perturbances do not develop from the interaction of the elements of the system (the programmers had to help a lot to produce even proto-perturbances), nor can far-reaching conclusions about human emotions be drawn from it. Nevertheless, it is the theoretical depth and breadth of Sloman's work that can give new impetus to the study of emotions. His combination of a design-oriented approach, the theory of evolution and the discussion of virtual and physical machines goes deeper than any other approach to the construction of autonomous agents.
