
8. Advancement and implementation of Toda's model

In recent years, interest in Toda's theoretical approach has risen again. This can be seen, among other things, in how often he is cited approvingly, for example by authors such as Frijda, Pfeifer, or Dörner. The increased reception of Toda coincides with a revival of interest in building real autonomous agents. Accordingly, there have been several attempts to modify Toda's model on the basis of recent findings from emotion psychology and to implement it, partly in computer simulations or robots. The works of Aubé, Wehrle, Pfeifer, Dörner, and others are presented briefly in this chapter.

8.1. The modification of Toda's urges by Aubé

Michel Aubé pointed out

the problems inherent in Toda's system of urges (Aubé, 1998). On the one

hand he criticizes Toda's classification of urges : Thus grief for

example is classified as one of the rule observance urges. On the

other hand he points out that Toda classifies a number of urges as

emotions which one would call rather a need (e.g. hunger). Finally he

notes that some urges represent what Frijda calls action tendencies and not the emotions themselves,

for example rescue or demonstration . Aubé therefore suggests

first of all to give up the definition of urges as emotions but to

understand them rather as motives. Aubé differentiates these motives in two

classes: Needs such as hunger or thirst represent a motivational control

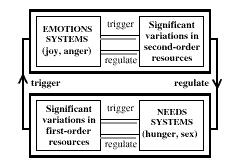

structure, which make resources of the first order available and their management possible. Emotions

such as annoyance or pride are motivational control structures, which create,

promote or protect resources of second order. Such control structures of

second order are, for Aubé, social obligations ( commitments ).

Fig. 6: Two control layers for the

management of resources (Aubé, 1998, p. 3) Commitments are for Aubé the central factor

with emotions:

Within autonomous agents, commitments represent dynamic units, active subsystems that watch for significant events bearing on their fulfilment or violation. They register as variables, for example, who is obligated to whom, until when, and about what.
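Aubé's description of a commitment as an active subsystem that registers who is obligated to whom, about what, and until when can be pictured as a small record type that inspects incoming events. The Python sketch below is only an illustration under our own assumptions: the names `Commitment`, `Status`, and `observe`, the integer time steps, and the rule that a commitment counts as fulfilled when the obligated agent performs the promised action before the deadline are hypothetical and are not taken from Aubé (1998).

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    FULFILLED = "fulfilled"
    VIOLATED = "violated"


@dataclass
class Commitment:
    """Hypothetical commitment record: who is obligated to whom, about what, until when."""
    debtor: str     # who is obligated
    creditor: str   # to whom
    content: str    # about what (here: the action that fulfils it)
    deadline: int   # until when (simulation time step)
    status: Status = Status.PENDING

    def observe(self, time: int, actor: str, action: str) -> Status:
        """Check one event for relevance to this commitment and update its status."""
        if self.status is not Status.PENDING:
            return self.status             # already settled; ignore further events
        if time > self.deadline:
            self.status = Status.VIOLATED  # deadline passed without fulfilment
        elif actor == self.debtor and action == self.content:
            self.status = Status.FULFILLED
        return self.status
```

For example, `Commitment("A", "B", "share_food", deadline=10)` stays pending when agent C shares food at step 5 and becomes fulfilled when A does so at step 8. In this sketch, any event after the deadline marks a still-pending commitment as violated.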

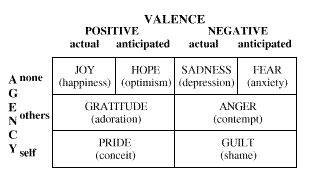

Aubé has developed

a general call matrix for fundamental classes of emotions combining

the approaches of Weiner and Roseman. He arranges Toda's social urges

in this matrix.

Fig. 7: Call structure for fundamental

emotions (Aubé, 1998, p.4)

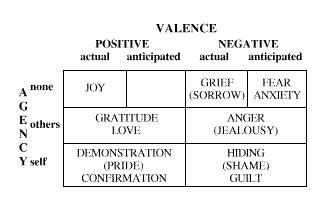

Fig. 8: Allocation of Todas urges to

the call structure for fundamental emotions (Aubé, 1998, p. 5) Aubé comes to the

conclusion that his modified version of Toda's urges agrees with the

most important theories of motivation and with his theory of emotions-as-commitment-operators.

Such a control structure is for him a substantial condition, in order to

design cooperative adaptive agents which can move independently in a complex

social environment. 8.2.

The partial implementation of Toda's theory by Wehrle

Wehrle converted the basic

elements of Toda's social Fungus Eater into a concrete computer model

(Wehrle, 1994). As a framework for this he used the Autonomous Agent

Modeling Environment (AAME). The AAME was developed specifically

to investigate psychological and cognitive theories of agents in concrete

environments. To AAME belongs an object-oriented simulation language

with which complex micro worlds and autonomous systems can be modelled.

Moreover, a number of interactive simulation instruments belong to the

system with which the inspection and manipulation of all objects of

the system are possible during execution. For the concrete implementation

of social Fungus Eaters some additional assumptions were necessary which

cannot be found in this way in Toda's work: They keep a certain distance

to each other, in order to avoid conflicts around food finds or ineffective

ore collecting. On the other hand they keep in loose contact, in order

to be able to help one another in an emergency. In place of pre-programmed

urges Wehrle's model uses a cybernetic control loop in which the

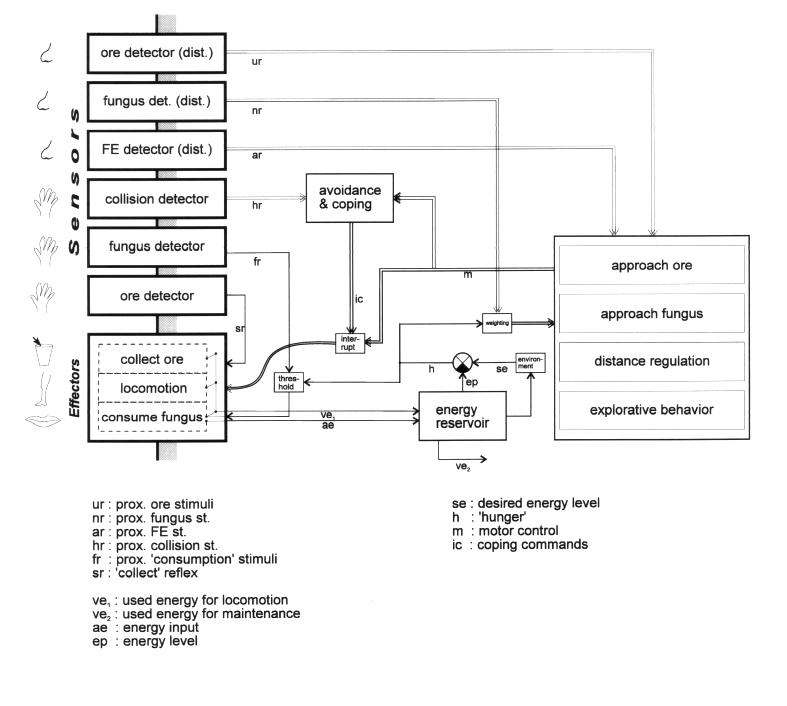

energy balance of an agent is linked with hedonistic elements. The complete model of the

social Fungus Eaters looks as follows:

Fig. 9: Model of a social Fungus Eater (Wehrle, 1994)

Wehrle describes the emergent behaviour of the agents in his model as follows:

This implementation is certainly only a small first step toward actually developing an autonomous system based on Toda's principles. It shows, however, that this is possible in principle and that first emergent effects appear even in a very restricted implementation.

8.3. Pfeifer's "Fungus Eater principle"

Pfeifer has described the construction of autonomous agents according to the Fungus Eater principle, which is based on Toda's model (Pfeifer, 1994, 1996). His starting point was the only partial success of his model FEELER, as well as of other attempts by "classical" AI to develop computer models of emotions. He formulates his criticism in six points:

Pfeifer's Fungus Eater principle assumes that intelligence and emotions are characteristics of "complete autonomous systems". He therefore concerns himself with the development of such systems. In this way one can also avoid a fruitless debate about emotions and their function:

The Fungus Eater principle also means that an appropriately designed agent must be observed over a longer period of time in order to see which behaviour develops under which conditions.

Building on these remarks, Pfeifer developed two models of an autonomous Fungus Eater: a Learning Fungus Eater with a physical implementation and a Self-sufficient Fungus Eater as a pure software simulation. The Learning Fungus Eater is a small robot equipped with three kinds of sensors: proximity sensors measure the distance to an obstacle (high activation when it is close, low when it is far away); collision detectors are activated by collisions; target sensors detect a target when they are within a certain radius of it. The robot has two wheels, which are driven independently by two motors.
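The sensor and drive description can be made concrete with a small Python sketch. The linear fall-off of the proximity signal, the sensor range, the target radius, the axle length, and the time step are assumptions for illustration; the text only says that proximity activation is high near an obstacle and low far from it. The `drive` function uses standard differential-drive kinematics for two independently driven wheels.

```python
import math
from dataclasses import dataclass


def proximity_activation(distance: float, sensor_range: float = 1.0) -> float:
    """High when an obstacle is close, falling linearly to 0 at the range (assumed shape)."""
    if distance >= sensor_range:
        return 0.0
    return 1.0 - max(distance, 0.0) / sensor_range


def collision_activation(distance: float) -> float:
    """Collision detectors fire only on contact."""
    return 1.0 if distance <= 0.0 else 0.0


def target_activation(distance_to_target: float, radius: float = 2.0) -> float:
    """Target sensors respond only within a fixed radius around the target."""
    return 1.0 if distance_to_target <= radius else 0.0


@dataclass
class Pose:
    x: float
    y: float
    heading: float  # radians


def drive(pose: Pose, v_left: float, v_right: float,
          axle: float = 0.5, dt: float = 0.1) -> Pose:
    """Differential drive: two wheel speeds give a forward speed and a turning rate."""
    v = (v_left + v_right) / 2.0       # forward speed
    omega = (v_right - v_left) / axle  # turning rate
    return Pose(
        pose.x + v * math.cos(pose.heading) * dt,
        pose.y + v * math.sin(pose.heading) * dt,
        pose.heading + omega * dt,
    )
```

In this picture, a reverse-and-turn reflex amounts to a short sequence of wheel commands, for example both wheels backwards followed by the two wheels turning in opposite directions.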

The Learning Fungus

Eater has two reflexes: collision-reverse-turn

and if-target-detected-turn-towards-center-of-target. The control architecture consists of a

neural net which can be changed partially by Hebbian learning:

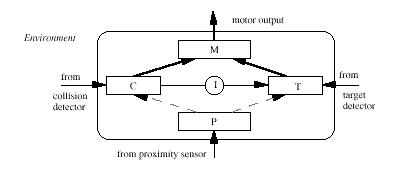

Fig. 10: Control architecture of a Learning

Fungus Eater (Pfeifer, 1994, p. 10) The entire control system

consists of four layers: the collision

layer, the proximity layer, the target layer, and the engine

layer. The only task of the robot

is to move around. Its environment

looks as follows:

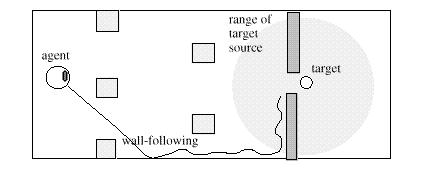

Fig. 11: Environment of the Learning Fungus Eater (Pfeifer, 1994, p. 10)

What happens when the robot is switched on? At first it will bump into obstacles. Each reverse-and-turn action enables Hebbian learning between the proximity layer and the collision layer, until the robot has learned to avoid obstacles. From the outside it looks as if the robot can anticipate obstacles. Pfeifer points out that such "anticipatory" behaviour is usually thought to require an architecture with several layers, whereas a single layer is in fact sufficient. Pfeifer also describes two further phenomena of the robot's behaviour.
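The effect of Hebbian learning between the proximity and the collision layer can be illustrated with a deliberately reduced Python sketch: one proximity unit, one collision unit, and a single connection weight between them. The step size, learning rate, firing threshold, and weight cap are hypothetical choices, and Pfeifer's actual layers contain many units. The collision unit is driven by the hard-wired collision detector and by the learned proximity input; whenever it fires, the reverse-and-turn reflex runs and the weight is strengthened in proportion to the product of both activations (Hebb's rule).

```python
def run_episode(w: float, eta: float = 0.2, threshold: float = 0.5,
                w_max: float = 1.0) -> tuple[float, float]:
    """Drive straight at an obstacle from distance 1.0 in steps of 0.1.

    Returns the distance at which the reverse-and-turn reflex fired and the updated weight.
    """
    for step in range(10, -1, -1):
        d = step / 10
        p = 1.0 - d                          # proximity unit: high when the obstacle is close
        c_ext = 1.0 if step == 0 else 0.0    # collision detector: fires only on contact
        c = min(1.0, c_ext + w * p)          # collision unit: hard-wired plus learned input
        if c > threshold:                    # collision unit active: reverse-and-turn reflex
            w = min(w_max, w + eta * p * c)  # Hebbian update: co-activity strengthens the link
            return d, w
    raise AssertionError("unreachable: the collision detector always fires at contact")


def simulate(episodes: int = 10) -> list[float]:
    """Repeat approach episodes and record where the robot turned each time."""
    w = 0.0  # no proximity -> collision link at the start: nothing is "anticipated"
    distances = []
    for _ in range(episodes):
        d, w = run_episode(w)
        distances.append(d)
    return distances
```

With these settings, the first episodes end in contact with the obstacle (distance 0.0), while later episodes end with a turn at a positive distance. Nothing in the code represents anticipation explicitly; the earlier turning follows only from the learned weight.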

According to Pfeifer, the robot's behaviour clearly demonstrates that a system without strategies, without an anticipation mechanism, and without knowledge about sources of food can show behaviour which observers classify as purposeful and motivated, but which results only from the interaction of the system with its environment.

The Learning Fungus Eater is not a complete autonomous system, however, insofar as it cannot sustain itself. This is why Pfeifer developed the Self-sufficient Fungus Eater, initially only as a software simulation. In this case the agent is situated in a "Toda landscape" with fungi as food and ore to be mined. Action selection here is considerably more complex: the agent can explore (look for ore or food), eat, or collect ore.

What it does is determined by the central variables "energy level" and "collected ore quantity". For action selection in any given situation the agent has only one rule: "If the agent is exploring and energy level is higher than amount of ore collected per unit time, it should ignore fungus (but should not ignore ore), if not it should ignore ore (but should not ignore fungus)." (Pfeifer, 1994, p. 12) Here, too, according to Pfeifer, the result is a state to which observers attribute high emotional intensity.
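Pfeifer's single selection rule can be written as a function, and a toy loop shows how it makes the agent alternate between collecting ore and eating. Everything beyond the rule itself is assumed for illustration: the agent is always exploring, fungus and ore are always within reach, each step costs one unit of energy, and eating restores five units. Following the wording of the rule, "if not" is read as the negation of the whole condition.

```python
def attention_filter(exploring: bool, energy_level: float,
                     ore_per_unit_time: float) -> str:
    """Pfeifer's rule: return which resource the agent should ignore."""
    if exploring and energy_level > ore_per_unit_time:
        return "fungus"  # ignore fungus, but not ore
    return "ore"         # otherwise ignore ore, but not fungus


def forage(steps: int = 30, energy: float = 10.0) -> list[str]:
    """Toy run in an assumed world where fungus and ore are always within reach."""
    ore = 0
    actions = []
    for t in range(1, steps + 1):
        energy -= 1.0                  # assumed energy cost per step
        ore_rate = ore / t             # ore collected per unit time so far
        if attention_filter(True, energy, ore_rate) == "fungus":
            ore += 1                   # fungus ignored: collect ore
            actions.append("collect")
        else:
            energy += 5.0              # ore ignored: eat fungus (assumed energy gain)
            actions.append("eat")
    return actions
```

With these settings, the agent collects ore for the first nine steps, eats when its energy reaches zero, and then alternates between stretches of ore collecting and single meals, although only one comparison drives its behaviour.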

Pfeifer cautions in the same essay that these few findings naturally cannot explain what emotions really are. However, he expects a great deal from pursuing this approach further, even if it is very time-consuming; more, in any case, than from computer models that deal with an isolated emotion model.

8.4. The approach of Dörner et al.

Dörner has developed a computer model that integrates cognitive, motivational, and emotional processes (Dörner et al., 1997; Dörner and Schaub, 1998; Schaub, 1995, 1996): the PSI model of intention regulation. Within the framework of PSI he developed the model "EmoRegul", which is concerned particularly with emotional processes.

PSI is part of a theoretical approach which Dörner calls "synthetic psychology". By designing psychological processes, this approach tries to analyze how these processes can be represented as information processing. Dörner's starting point is similar to Toda's when he writes "...that in psychology one may not divide the different psychological processes into their components with impunity" (Dörner and Schaub, 1998, p. 1).

The core of the PSI model is the concept of "intention". Schaub defines intention as an internal psychological process, "... defined as an ephemeral structure consisting of an indicator for a state of lack (hunger, thirst, etc.) and processes for the removal or avoidance of this state (either ways to the consummatory final action or ways to avoidance, in the broadest sense 'escape')." (Schaub, 1995, p. 1)

The PSI agents are conceived as steam engines which move in a simulated environment with watering holes, gasoline stations, quarries, etc. In order to move, the agents need both gasoline and water, which are found in different places.

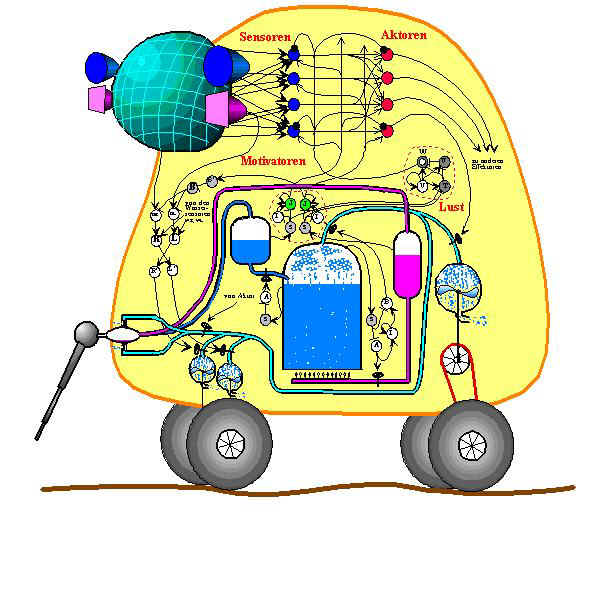

Fig. 12: Schematic structure of the PSI system (Dörner and Schaub, 1998, p. 7)

A PSI agent has a set of needs, which are divided into two classes:

material needs and informational needs. The material needs include the intake of fuel and water as well as the avoidance of dangerous situations (e.g. falling rocks). The informational needs include certainty (an expectation is fulfilled) and uncertainty (an expectation is not fulfilled), competence (fulfilment of needs), and affiliation (the need for social contact).

In PSI, a state of lack can be likened to a container whose content has fallen below a certain threshold. Dörner calls the difference between the actual and the desired state the desire: "A need thus signals that a desire of a certain extent is present." (Dörner and Schaub, 1998, p. 10) Such a state of lack activates a motivator whose degree of activation is higher the larger the deviation from the desired value and the longer the lack has persisted. The motivator then tries to take over action control in order to eliminate this state by a consummatory final action. To this end it aims at a goal that it knows from past experience. Together with goals, motives arise in PSI: instances that initiate an action, direct it toward a certain goal, and maintain it until the goal is achieved.

Since several motivators always compete with one another for action control, the system has a motive selector which decides, by means of a fast expectation × value calculation, which motivator has the greatest motive strength and therefore takes precedence. The value of a motivator is determined by its importance (the size of the deviation from the desired value) and its urgency (the time available until the current state must be removed); the expectation is determined by the agent's ability to actually satisfy the need (probability of success).

PSI also has a memory, which consists of sensory and motor "schemata". Sensory schemata represent the agent's knowledge about its environment; motor schemata are behaviour programs. PSI does not distinguish between different kinds of memory. Action control in PSI takes place through intentions, which are defined operationally as the combination of the selected motive with the information linked with the active motivator in the memory network.
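The container picture of needs and the motive selector's expectation × value calculation can be sketched in Python as follows. The text states only that value depends on importance and urgency and that expectation is the probability of success; multiplying importance by urgency, measuring urgency as the inverse of the remaining time, and all field names and numbers are assumptions, not Dörner's formulas.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Need:
    """A need pictured as a container with a desired fill level (hypothetical fields)."""
    name: str
    level: float               # current content of the container
    set_point: float           # desired value
    threshold: float           # below this level a state of lack exists
    time_left: float = 10.0    # time available before the lack must be removed
    success_prob: float = 0.5  # expectation: estimated chance of satisfying the need

    def in_lack(self) -> bool:
        return self.level < self.threshold

    def desire(self) -> float:
        """Difference between desired and actual state ('desire' in the text)."""
        return max(0.0, self.set_point - self.level)


def motive_strength(need: Need) -> float:
    """Expectation x value, with value = importance x urgency (an assumed combination)."""
    importance = need.desire()                 # size of the deviation
    urgency = 1.0 / max(need.time_left, 1e-6)  # less time left -> more urgent
    value = importance * urgency
    return need.success_prob * value


def select_motive(needs: List[Need]) -> Optional[Need]:
    """Motive selector: among needs in a state of lack, the strongest motive wins."""
    candidates = [n for n in needs if n.in_lack()]
    if not candidates:
        return None
    return max(candidates, key=motive_strength)
```

For example, a water need with a smaller deficit but less time left and a higher chance of success can win over a larger fuel deficit, while a need above its threshold does not compete at all.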

The information linked with the active motivator concerns the desired goals, the operators or action schemata to be applied, knowledge about past futile attempts at solving the problem, and the plans that PSI produces with the help of heuristic procedures. All of this information is represented in neural nets; an intention, as a bundle of all this information, makes up the working memory of PSI.

The central mechanisms of emotion regulation in PSI are the motivators for certainty and competence, that is, two informational needs. Active certainty or competence motivators elicit certain actions or increase the readiness for them: "A dropping certainty leads to an increase in the extent of 'background control'. This means that PSI turns away from its current intention more frequently than usual in order to check the environment. For when the environment is hardly predictable, one should be prepared for everything (...) Furthermore, with dropping certainty the tendency toward escape behaviour or toward specific exploration rises (...) Less extreme cases of escape are called information denial; one simply no longer looks at those areas of reality that have proved unpredictable. Part of this 'retreat' from reality is that PSI becomes more hesitant in its behaviour as certainty drops: it does not turn to action so quickly, plans longer than it would under other circumstances, and is not as 'courageous' when exploring." (Dörner and Schaub, 1998, p. 33)

Emotions thus arise in PSI not in a separate emotion module, but as a consequence of the regulatory processes of a homoeostatic system.
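The modulating role of certainty described in the quotation can be sketched as a mapping from one certainty value to several behaviour parameters. Only the directions of change are taken from Dörner and Schaub: lower certainty means more background control, longer planning, more hesitation before acting, and less far-reaching exploration. The linear forms, the constants, the parameter names, and the update rule (fulfilled expectations pull certainty up, unfulfilled ones pull it down) are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Modulation:
    background_control: float  # chance per step of leaving the intention to check the environment
    planning_steps: int        # how long PSI plans before acting
    action_threshold: float    # motive strength needed before acting (hesitancy)
    exploration_range: float   # how far ("courageously") PSI explores


def modulate(certainty: float) -> Modulation:
    """Map certainty (0 = unpredictable, 1 = fully predictable) to behaviour parameters.

    Only the directions follow Dörner and Schaub; forms and constants are assumed.
    """
    certainty = min(max(certainty, 0.0), 1.0)
    uncertainty = 1.0 - certainty
    return Modulation(
        background_control=0.05 + 0.45 * uncertainty,
        planning_steps=2 + round(8 * uncertainty),
        action_threshold=0.2 + 0.6 * uncertainty,
        exploration_range=1.0 - 0.8 * uncertainty,
    )


def update_certainty(certainty: float, expectation_met: bool, rate: float = 0.1) -> float:
    """Fulfilled expectations move certainty toward 1, unfulfilled ones toward 0."""
    target = 1.0 if expectation_met else 0.0
    return certainty + rate * (target - certainty)
```

No emotion is represented anywhere in this code; a pattern that an observer might call anxious caution appears only through the joint shift of these parameters after a run of unfulfilled expectations.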

Schaub puts it this way:

"What we call emotions in humans are the different ways of organizing action, connected with the associated motivations." (Schaub, 1995, p. 6)

Dörner grants that many emotions cannot yet be represented in PSI because the system lacks a module for introspection and self-reflection. According to Dörner, however, this is only a matter of refining the implementation of the model and thus not a problem in principle.

Dörner's model shows a number of similarities with other models. Like Toda and Pfeifer, his starting point is to design a completely autonomous system without a separate emotion module. As with Frijda and Moffat, PSI contains a central memory which all modules can read and modify at any time:

"The use of common memory structures permits all processes to receive information, in particular about the intentions waiting to be processed. Each subprocess thus knows, e.g., the importance and urgency of the current intention." (Schaub, 1995, p. 6)

PSI does not work with explicit rules; it is a partially connectionist system which produces emotions by self-organization.

8.5. Summary and evaluation

The modeling approaches of Pfeifer and Wehrle clearly show how important Toda's theory is for the construction of autonomous agents that could not survive without the control function of emotions. Instead of pre-defined emotion taxonomies "wired" into the model, both authors take the opposite approach: their models contain only the most necessary instructions for the agent. While Wehrle still links certain events with hedonistic components, Pfeifer dispenses with them completely. In the end, both systems show behaviour that an observer can interpret as "emotional".

Both models have the disadvantage that they say relatively little about emotions in computer agents; for that, a longer observation period is necessary in which the agents can develop. This avoids the problem of programming emotions arbitrarily into a system; on the other hand, it opens a new discussion about whether behaviour that appears emotional to an observer is actually emotional. Here the argument becomes a philosophical one again.

Both approaches consistently follow the assumption that emotions are emergent phenomena through to the end, with all the pros and cons this entails.

Aubé's attempt to link Toda's urges with his own theoretical model of emotions has its own problems. He correctly recognizes a number of inconsistencies in Toda's model of urges and tries to eliminate them. However, he places his own definition of emotions as social phenomena in the foreground. Aubé's fusion of Weiner's and Roseman's theories, which he then combines with his own and Toda's approaches into one, raises fundamental problems whose discussion would lead too far here.

Finally, Dörner's model is similar in many respects to the approaches of Pfeifer and Wehrle (and thus Toda): emotions are understood as control functions in an autonomous system. Dörner links this approach with a homoeostatic regulation model. Dörner, too, does not define emotions explicitly; emotional behaviour develops from changes in two parameters which he calls "certainty" and "competence". Here, too, emotional behaviour is attributed to the system only from the outside, and Dörner likewise regards emotions as emergent phenomena which do not have to be integrated into a system as a separate module. It remains to be seen in which direction PSI (and thus Dörner's emotion model) will develop once the system receives an introspection module.
